Imagine the following scenario or something close to it:

- You have an IPSEC VPN to another network from yours.

- There are some CSV (or other) files on a file share that you need to access.

- The computer owning the file share is not a member of your Windows domain.

- For some reason (just pick one) the idea of installing a FTP service or other thing on the remote computer is not an option.

This presents a problem because, unless the person who owns the remote computer is willing to seriously compromise security (and you have the guts to ask him to do so), you aren’t getting to those files using SSIS – at least not in a completely automated fashion. Or are you?

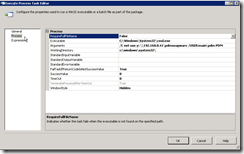

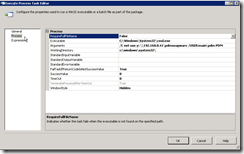

Using SQL Server Integration Services, you can access a remote file share with a username and password provided by the remote computer owner while SSIS is running as a scheduled task using the SQL Agent – completely automated. Check out the following screen shot.

The rest of the SSIS package is cut off as it is irrelevant to our discussion. We are interested in, specifically, the Execute Process Task. Let’s double-click on that task to see what’s up.

In the execute process task we are doing something I am certain most of you have done before – use the command interpreter – cmd.exe – to map a network drive using the NET USE command. For those that need a refresher on the NET USE command, visit this TechNet web page.

Let’s go through the fields to see what we have.

- RequireFullFileName – False

Indicates whether the task fails if the executable is not found in the specified path. We aren’t worried about this since we know CMD.EXE is in %windir%\system32.

- Executable – c:\windows\system32\cmd.exe

The fully qualified path to the executable you want to run. I assume you have installed Windows to the default directory. Use %windir% if you are not sure or have Windows installed some place else. So that would be %windir%\system32\cmd.exe.

- Arguments – /C net use y: machineIP\sharename /USER:username password

Specifies the arguments for the executable. In this case, the NET USE command and its arguments. Also, notice that we are throwing the “/C’” switch to cmd.exe. That means to carry out the command specified by string and then terminate. We don’t want a command prompt lingering around after we are done. Basically, do your job and leave is what we are saying.

- WorkingDirectory – c:\windows\system32

Specifies the working directory of the executable. Set to the path that cmd.exe is found in. Use %windir% if you are not sure or have installed Windows elsewhere.

- FailTaskIfReturnCodeIsNotSuccessValue – True

If we get a failure code for the return value of the command, then fail the task. You, in most cases I’m sure, want this to be true.

Obviously, we must spell out the success value to look out for. CMD.EXE usually returns a value of 0 if a command is successful.

It should take no time at all to map a drive. The default value is fine.

Remember, we are running this fully automated. You probably will not even be logged on the computer when this SSIS package runs. Therefore, you do not want a Window.

Once this task runs in SSIS, you should then be able to access any files in the remote file share. Do keep in mind that what you can do with those files is up to the remote administrator. If you cannot write to the files and need to, you may need to ask the remote administrator for write privileges. Also, accessing this drive will work as long as the SSIS package is executing. Once execution of the SSIS package is complete, the file share access goes away as it is not persistent.

I’d be interested to know if this helps anyone.

James