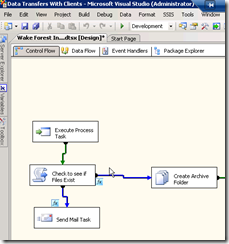

Let’s say you are transferring data from the database of one program to the database of another program. The problem is that one of the fields, say it’s a field called “usedescription” that is a data type of DT_NTEXT, has HTML in it because the program used that HTML formatting to display the text a certain way on it’s screen. However, the new program doesn’t need that HTML and perhaps that HTML even causes issues. So, how do you move the data from one database to the other and properly remove the HTML?

In SSIS, you can use the script component and the HTML Agility Pack. The HTML Agility Pack is a free code library that you can use to parse HTML (or remove it as we are doing). First, go to the following website to download the library:

http://htmlagilitypack.codeplex.com

Note that you can now get the library via Nuget.

Once you’ve downloaded the library to your downloads folder and unzipped it, note that the HTML Agility Pack contains sub-folders for the various versions of .Net and even WinRT and Windows Phone. Since we are using SSIS on SQL Server 2014, copy the files in the .Net40 directory to the C:\Windows\Microsoft.NET\Framework\v4.0.30319\ directory of the server that will be running your SSIS packages. Next, open up your script component and set it in your data flow and add the field in question to the list of inputs (don’t forget to set it to Read/Write). Click on Edit Script and when the code editor comes up add the HtmlAgilityPack.DLL to your list of references (you may have to browse for it to add it).

You will also need to add the namespace: using HtmlAgilityPack;

Here is the code in the script component with comments along the way (note that I took out the stuff we don’t need):

When running our SSIS package, the HTML Agility Pack removes unwanted HTML quite nicely. Check out our before and after screenshots:

Before:

After:

Obviously, there is more clean-up to be done. However, getting the HTML out was a good first step.

JamesNT